Introduction to Particle Swarm Optimization

Particle Swarm Optimization (PSO) is a computational method that optimizes a problem by iteratively improving candidate solutions with regard to a given measure of quality. It was introduced by James Kennedy and Russell Eberhart in 1995, drawing inspiration from the social behavior of bird flocks and fish schools. The core idea is to move a population of particles through a multi-dimensional search space, with each particle guided by its own experience and by that of the swarm.

In PSO, each candidate solution, referred to as a “particle,” has a position in the solution space that represents a potential solution to the optimization problem. Each particle also has a velocity that determines how it moves within this space. Over time, the particles adjust their positions based on their own experiences and those of their neighbors, effectively searching for the best solution. This collaborative approach mimics the social interaction among members of a swarm, which is crucial for the efficiency of the PSO algorithm.

The significance of particle swarm optimization extends across various fields of computational intelligence and optimization. It has been effectively applied to complex problems, such as function optimization, neural network training, and many real-world applications in engineering, economics, and artificial intelligence. One of the advantages of the PSO algorithm is its ability to converge quickly on a suitable solution without requiring gradient information, making it versatile for diverse optimization scenarios.

Implementations of PSO in MATLAB and Python have also become popular among researchers and practitioners. MATLAB provides a particleswarm solver, and Python libraries such as pyswarm offer an accessible way to apply the method. This availability reinforces PSO’s role as a standard technique in the toolbox of optimization algorithms.

How Particle Swarm Optimization Works

Particle Swarm Optimization (PSO) is an iterative computational method that employs a swarm of particles to navigate the search space in search of optimal solutions. The core of the particle swarm optimization algorithm lies in its population-based approach, where each particle represents a potential solution to the problem at hand. Initially, particles are randomly distributed within the defined search space, each with their own position and velocity. As the algorithm progresses, these particles update their positions based on two primary factors: their previous best-known position and the best-known position of the entire swarm.

At the very heart of the PSO algorithm is the concept of a fitness function, which evaluates the quality of each particle’s solution. This function plays a pivotal role in guiding the search process, as the particles seek to minimize or maximize the fitness score depending on the optimization objective. The velocity of each particle is updated in accordance with both the global best and personal best positions, allowing them to adjust their trajectory towards promising areas of the search space.

As particles continue to evolve through iterations, they engage in a collaborative process; each particle learns from the experiences of its neighbors. This social sharing of information significantly enhances the capability of the particle swarm algorithm to explore diverse regions and facilitate convergence to an optimal solution. The velocity updates are performed using mathematical equations that incorporate coefficients to balance exploration and exploitation. This balance is critical, as it determines whether a particle will explore new regions of the solution space or refine its current path.
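One common way to shift that balance during a run is a time-varying inertia weight, the coefficient that scales a particle’s previous velocity: a large weight early on favors exploration, and a smaller one later favors refinement. The sketch below assumes a linear schedule from 0.9 down to 0.4; the function name and both endpoint values are illustrative choices, not something the algorithm prescribes.

```python
def inertia_weight(iteration, n_iters, w_start=0.9, w_end=0.4):
    """Linearly interpolate the inertia weight from w_start to w_end."""
    if n_iters <= 1:
        return w_end
    fraction = iteration / (n_iters - 1)  # 0.0 at the first iteration, 1.0 at the last
    return w_start + (w_end - w_start) * fraction


# Print the weight at the start, middle, and end of a 100-iteration run.
for t in (0, 50, 99):
    print(t, round(inertia_weight(t, 100), 3))
```

Inside an optimization loop, the returned value would simply replace a fixed inertia weight at each iteration.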

Implementing PSO in MATLAB or Python lets researchers and practitioners apply the algorithm to complex optimization problems. Its flexibility makes it suitable for a wide range of applications, from engineering design to machine learning. Understanding the mechanics of PSO makes it easier to apply the algorithm effectively across different kinds of problems.

Implementing Particle Swarm Optimization: A Practical Guide

Implementing particle swarm optimization (PSO) can be approached systematically using programming languages such as Python or MATLAB. The following steps provide a foundational guide to help you get started with the particle swarm optimization algorithm.

First, define the problem you wish to solve. This involves selecting the objective function that the algorithm will optimize. Common benchmark choices are the Rastrigin function, which has many regularly spaced local minima, and the Rosenbrock function, whose global minimum lies in a narrow, curved valley that is easy to find but hard to converge along.
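For reference, the two benchmark functions mentioned above can be written in a few lines. This is a minimal sketch in plain Python using their standard textbook definitions; the function names are our own.

```python
import math


def rastrigin(x):
    """Rastrigin function; global minimum 0 at x = (0, ..., 0)."""
    return 10 * len(x) + sum(xi * xi - 10 * math.cos(2 * math.pi * xi) for xi in x)


def rosenbrock(x):
    """Rosenbrock function; global minimum 0 at x = (1, ..., 1)."""
    return sum(100 * (x[i + 1] - x[i] ** 2) ** 2 + (1 - x[i]) ** 2
               for i in range(len(x) - 1))


# Evaluate each function at its known minimizer.
print(rastrigin([0.0, 0.0]))
print(rosenbrock([1.0, 1.0]))
```

Either function can serve as the objective when testing a PSO implementation, because the location of the true minimum is known.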

Next, initialize the parameters for the PSO algorithm. This includes the number of particles, their positions, and velocities. Each particle represents a potential solution, with its position in the search space corresponding to a specific solution to the problem. Initializing the particles randomly within the defined bounds of the objective function ensures adequate coverage of the search area.
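The initialization step might look like the following sketch. It uses only the standard library; the helper name init_swarm and the choice to draw initial velocities from plus or minus 10% of each dimension’s range are assumptions made for illustration.

```python
import random


def init_swarm(n_particles, lower, upper, seed=None):
    """Return random positions inside [lower, upper] and small random velocities."""
    rng = random.Random(seed)
    spans = [hi - lo for lo, hi in zip(lower, upper)]
    positions = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
                 for _ in range(n_particles)]
    # Velocities are drawn from +/- 10% of each dimension's range (an assumed heuristic).
    velocities = [[rng.uniform(-0.1 * s, 0.1 * s) for s in spans]
                  for _ in range(n_particles)]
    return positions, velocities


positions, velocities = init_swarm(5, lower=[-5.0, -5.0], upper=[5.0, 5.0], seed=42)
print(positions[0], velocities[0])
```

Passing a seed makes the initial swarm reproducible, which helps when comparing parameter settings.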

Once the particles are initialized, the core of the particle swarm optimization algorithm begins. For every iteration, evaluate the fitness of each particle based on the objective function. The best position discovered by each particle is recorded, along with the best position among the swarm. These best positions guide the velocity and position updates in subsequent iterations, using the formulas:

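vi = w * vi + c1 * r1 * (pi - xi) + c2 * r2 * (g - xi)

xi = xi + vi

Here xi and vi are the position and velocity of particle i, pi is the best position that particle has found so far, and g is the best position found by the whole swarm. The inertia weight w controls how much of the previous velocity is kept, c1 and c2 are the cognitive and social acceleration coefficients, and r1 and r2 are random numbers drawn uniformly from [0, 1] at each update.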
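To make the two update formulas concrete, here is a small sketch that applies them once to a single one-dimensional particle. The random factors r1 and r2 are fixed by hand so the arithmetic can be followed; all numeric values and the function name are illustrative assumptions.

```python
def update_particle(x, v, pbest, gbest, w, c1, c2, r1, r2):
    """Apply one velocity update and one position update to a single particle."""
    new_v = [w * vd + c1 * r1 * (pd - xd) + c2 * r2 * (gd - xd)
             for xd, vd, pd, gd in zip(x, v, pbest, gbest)]
    new_x = [xd + vd for xd, vd in zip(x, new_v)]
    return new_x, new_v


# One particle at x = 2 moving with v = 1; its own best is 1 and the swarm best is 0.
new_x, new_v = update_particle(x=[2.0], v=[1.0], pbest=[1.0], gbest=[0.0],
                               w=0.7, c1=1.5, c2=1.5, r1=0.5, r2=0.2)
print(new_x, new_v)
```

With these numbers the new velocity is 0.7 - 0.75 - 0.6, or about -0.65, so the particle reverses direction and lands at about 1.35, closer to both best positions.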

In Python, NumPy makes the array arithmetic in these updates straightforward, and third-party packages such as pyswarm provide a ready-made PSO routine. MATLAB users can use the particleswarm solver from the Global Optimization Toolbox. In either case, the updates are repeated until a convergence criterion is met, such as a maximum number of iterations or a satisfactory fitness level.
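The steps above can be put together into a compact loop. The following is a minimal sketch in plain Python rather than a reference implementation: the parameter values (w = 0.7, c1 = c2 = 1.5), the use of a fresh random factor per dimension, the clamping of positions to the bounds, and the sphere test function are all assumptions chosen for illustration.

```python
import random


def pso_minimize(func, lower, upper, n_particles=30, n_iters=200,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize func over the box [lower, upper] with a basic global-best PSO."""
    rng = random.Random(seed)
    dim = len(lower)
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]                 # personal best positions
    pbest_val = [func(p) for p in pos]          # personal best values
    best_i = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[best_i][:], pbest_val[best_i]

    for _ in range(n_iters):                    # stop after a fixed number of iterations
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move the particle, then clamp it to the search bounds.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lower[d]), upper[d])
            val = func(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def sphere(x):
    """Simple test objective with its minimum of 0 at the origin."""
    return sum(xi * xi for xi in x)


best_x, best_val = pso_minimize(sphere, [-5.0, -5.0], [5.0, 5.0])
print(best_x, best_val)
```

Swapping sphere for another objective and adjusting the bounds is enough to try the loop on a different problem; a fitness-threshold stopping rule could be added alongside the iteration limit.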

Finally, analyze the results and visualize the progress using graphs or plots. This step is crucial for understanding how effectively the particle swarm optimization algorithm has navigated the search space and optimized the solution. Through practice and iterative refinement of parameters, you can gain further insights into the robust capabilities of particle swarm optimization in various applications.


Implementing Particle Swarm Optimization in MATLAB

Setting Up MATLAB for PSO

To implement Particle Swarm Optimization (PSO) in MATLAB, first make sure your environment is properly configured. Use a reasonably recent MATLAB release, since newer versions include features and bug fixes that may matter for PSO tasks. The particleswarm solver used in this section is part of the Global Optimization Toolbox, which in turn requires the Optimization Toolbox, so both need to be installed.

To install the necessary toolboxes, open MATLAB and open the “Add-Ons” menu in the top toolbar. From there, select “Get Add-Ons” and search for the Global Optimization Toolbox and the Optimization Toolbox, along with any other toolboxes your application needs. Once you have found them, follow the installation prompts to add them to your MATLAB environment.

After the toolboxes are installed, it is advisable to tidy your workspace. Clearing variables that consume memory unnecessarily helps when running larger problems. Additionally, consider using MATLAB’s parallel computing features, which can significantly reduce computation time for larger swarm sizes or expensive objective functions. To enable this, start a parallel pool, for example with the parpool command (this requires the Parallel Computing Toolbox), and set the 'UseParallel' option of particleswarm to true.

MATLAB Code for Particle Swarm Optimization

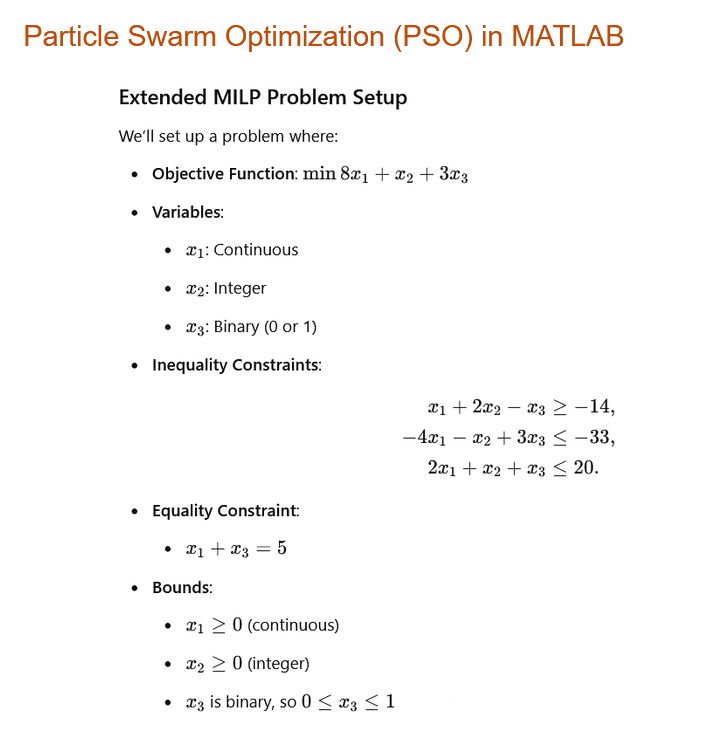

The following is the constrained optimization problem that we are going to solve using Particle Swarm Optimization in MATLAB:

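    clc, clear, close all

    % Define the Inequality Constraints (A*x <= b)
    A = [-1, -2,  1;
         -4, -1, -3;
          2,  1,  1];
    b = [14; -33; 20];

    % Define the Equality Constraints (Aeq*x = beq)
    Aeq = [1, 0, 1];
    beq = 6.5;

    % Define Bounds
    lb = [0; 0; 0];      % lower bounds for x1, x2, x3
    ub = [Inf; Inf; 1];  % x1 and x2 have no upper bound; x3 is limited to [0, 1]

    % Set the options for Particle Swarm Optimization
    options = optimoptions('particleswarm', ...
        'SwarmSize', 20, ...      % Number of particles
        'MaxIterations', 10, ...  % Maximum number of iterations
        'Display', 'iter');       % Display optimization progress

- SwarmSize: Specifies the number of particles in the swarm. More particles allow for broader exploration of the search space, but require more computation.

- MaxIterations: Defines the maximum number of iterations the algorithm will run. It determines how many cycles of optimization the swarm goes through before stopping, balancing time and solution refinement. The value 10 keeps this demonstration short; a larger value usually gives a better result.

- Display: Controls the level of output shown during the optimization process. Setting it to ‘iter’ displays progress after each iteration, helping you track how the solution is evolving.

The particleswarm solver accepts only bound constraints, and it treats every variable as continuous, so x3 is not forced to be exactly 0 or 1. The linear constraints defined above are therefore enforced through a penalty term added to the objective function:

    % Wrap the objective so that it also receives the constraint data
    objective = @(x) objectiveFunction(x, A, b, Aeq, beq);

    % Run the Particle Swarm Optimization
    [x, fval] = particleswarm(objective, 3, lb, ub, options);

    % Display Results
    disp('Optimal Solution:')
    disp(x)
    disp('Optimal Objective Function Value:')
    disp(fval)   % includes the penalty if any constraint is still violated

    function obj = objectiveFunction(x, A, b, Aeq, beq)
        x = x(:);                               % particleswarm passes a row vector
        cost = 8 * x(1) + 1 * x(2) + 3 * x(3);  % Objective function to minimize
        violation = sum(max(0, A * x - b)) + sum(abs(Aeq * x - beq));
        obj = cost + 1e3 * violation;           % penalty weight 1e3 is an assumed value
    end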

Implementing Particle Swarm Optimization in Python

Particle Swarm Optimization (PSO) is a population-based optimization algorithm inspired by the collective behavior of birds and fish. It is widely used for both constrained and unconstrained optimization problems. In this section, we implement PSO in Python using the pyswarm library and solve an optimization problem with constraints.

Setting Up Python Environment for PSO

To effectively implement particle swarm optimization (PSO) algorithms, having a properly configured Python environment is crucial. This setup ensures that you have all the necessary tools and libraries to develop, test, and run your optimization algorithms efficiently.

Start by installing Python. It is recommended to download the latest version of Python from the official Python website. The installation process is straightforward: download the installer and follow the on-screen instructions. During the installation, ensure that you check the box that says “Add Python to PATH” to simplify command-line interactions.

Next, install essential libraries that will facilitate the development of your PSO algorithms. NumPy, a library for numerical computations, is essential for handling arrays and mathematical functions efficiently. Use the following command in your terminal:

pip install numpy

Next, install the pyswarm library. Use the following command in your terminal:

pip install pyswarm

Another useful library is Matplotlib, which helps you visualize the results of your optimization runs. To install it, run the command:

pip install matplotlib

Python Code for Particle Swarm Optimization


1. Defining the Objective Function

The objective function is the mathematical function we aim to minimize. In this case, we use a linear function:

    def objective_function(x):
        return 8 * x[0] + 1 * x[1] + 3 * x[2]  # Minimize this function

This function represents the cost we want to reduce using PSO.

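2. Defining Constraints

Inequality Constraints

The problem has three inequality constraints, ensuring solutions remain feasible. pyswarm treats a constraint as satisfied when the returned value is greater than or equal to zero, so each constraint of the form a·x ≤ b is written as b − a·x:

    import numpy as np

    def inequality_constraints(x):
        return np.array([
            14 - (-1 * x[0] - 2 * x[1] + 1 * x[2]),
            -33 - (-4 * x[0] - 1 * x[1] - 3 * x[2]),
            20 - (2 * x[0] + 1 * x[1] + 1 * x[2])
        ])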

Equality Constraint

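The problem also requires x1 + x3 = 6.5. pyswarm’s pso function accepts only inequality constraints, so this function is not passed to the optimizer in step 4; it returns zero when the equality holds and can be used to check a candidate solution:

    def equality_constraint(x):
        return 6.5 - (x[0] + x[2])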
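Because the optimizer does not enforce every condition for us, it can help to check a candidate point against all of them by hand. The sketch below restates the three inequalities and the equality in plain Python; the helper name constraint_report, the tolerance, and the two test points are assumptions made for illustration.

```python
def constraint_report(x, tol=1e-9):
    """Return (is_feasible, inequality_values, equality_residual) for a candidate x."""
    ineq = [
        14 - (-1 * x[0] - 2 * x[1] + 1 * x[2]),
        -33 - (-4 * x[0] - 1 * x[1] - 3 * x[2]),
        20 - (2 * x[0] + 1 * x[1] + 1 * x[2]),
    ]
    eq_residual = 6.5 - (x[0] + x[2])
    # Inequalities must be non-negative; the equality residual must be (almost) zero.
    feasible = all(v >= -tol for v in ineq) and abs(eq_residual) <= tol
    return feasible, ineq, eq_residual


print(constraint_report([5.5, 8.0, 1.0]))  # a hand-picked point that satisfies everything
print(constraint_report([0.0, 0.0, 0.0]))  # the origin violates the second inequality
```

Running the same check on the solution returned by the optimizer shows how far it is from satisfying the equality.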

3. Setting Bounds

Each variable has specific bounds:

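- x1 and x2 are non-negative.
- x3 is binary (0 or 1) in the original problem. pyswarm works with continuous variables, so here x3 is only bounded to the interval [0, 1].

    lb = [0, 0, 0]    # Lower bounds
    ub = [10, 10, 1]  # Upper bounds (finite, so the initial swarm can be sampled between lb and ub)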

4. Running the PSO Algorithm

We define the constraints and execute the PSO algorithm:

    from pyswarm import pso

    # Only the inequality constraints are passed; pso has no equality-constraint argument
    best_x, best_fval = pso(objective_function, lb, ub, f_ieqcons=inequality_constraints, swarmsize=30, maxiter=40)

    print("Optimal Solution:", best_x)
    print("Optimal Objective Function Value:", best_fval)

The PSO algorithm iterates over particles, updating their positions to find the optimal solution.
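One possible way to bring the equality x1 + x3 = 6.5 into the search is to fold every constraint into the objective as a penalty. The following is a sketch under stated assumptions, not the approach used in the listing above: the penalty weight of 1e3 and the name penalized_objective are our own choices, and the constraint data are restated so the block runs on its own.

```python
# Constraint data restated from the problem: A x <= b and x1 + x3 = 6.5.
A = [[-1, -2, 1], [-4, -1, -3], [2, 1, 1]]
b = [14, -33, 20]
PENALTY = 1e3  # assumed weight; larger values punish violations more strongly


def objective_function(x):
    return 8 * x[0] + 1 * x[1] + 3 * x[2]


def penalized_objective(x):
    """Objective plus a weighted sum of all constraint violations."""
    ineq_violation = sum(
        max(0.0, sum(a * xi for a, xi in zip(row, x)) - bi)
        for row, bi in zip(A, b)
    )
    eq_violation = abs(x[0] + x[2] - 6.5)
    return objective_function(x) + PENALTY * (ineq_violation + eq_violation)


print(penalized_objective([5.5, 8.0, 1.0]))  # feasible point: no penalty is added
print(penalized_objective([0.0, 0.0, 0.0]))  # infeasible point: heavily penalized
```

A function like this could then be handed to pso with bounds only, for example pso(penalized_objective, lb, ub), leaving out f_ieqcons. The result depends on the penalty weight, so it should be checked against the original constraints.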

5. Visualizing the Optimization Process

pyswarm does not return the per-iteration history, so as an approximation we rerun the optimizer with an increasing iteration limit and plot the best value from each run. Because every run starts from a new random swarm, the curve is not guaranteed to decrease monotonically:

    import matplotlib.pyplot as plt
    import numpy as np

    def plot_convergence():
        iterations = np.arange(1, 11)  # Iteration limits from 1 to 10
        function_values = []
        for i in iterations:
            # Each call is an independent run limited to i iterations
            _, fval = pso(objective_function, lb, ub, f_ieqcons=inequality_constraints, swarmsize=20, maxiter=i)
            function_values.append(fval)
        plt.plot(iterations, function_values, marker='o', linestyle='-', color='b')
        plt.xlabel('Iterations')
        plt.ylabel('Objective Function Value')
        plt.title('PSO Convergence Plot')
        plt.grid(True)
        plt.show()

    plot_convergence()
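If a convergence curve from a single run is preferred, the best value has to be recorded inside the optimization loop itself. The sketch below does this with a small hand-written PSO in plain Python; it is not pyswarm’s implementation, and the parameter values and the simple quadratic test objective are assumptions for illustration.

```python
import random


def pso_with_history(func, lower, upper, n_particles=20, n_iters=10,
                     w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimal PSO that returns the best value found after each iteration."""
    rng = random.Random(seed)
    dim = len(lower)
    pos = [[rng.uniform(lower[d], upper[d]) for d in range(dim)]
           for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [func(p) for p in pos]
    gbest_val = min(pbest_val)
    gbest = pbest[pbest_val.index(gbest_val)][:]
    history = []
    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lower[d]), upper[d])
            val = func(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
            if val < gbest_val:
                gbest, gbest_val = pos[i][:], val
        history.append(gbest_val)  # best value seen so far, so it never increases
    return history


history = pso_with_history(lambda x: sum(v * v for v in x), [-5.0] * 3, [5.0] * 3)
print(history)
```

The returned list can be plotted directly, for example with plt.plot(range(1, len(history) + 1), history), and the curve is non-increasing by construction.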

In this blog, we successfully implemented Particle Swarm Optimization (PSO) in Python to solve a constrained optimization problem. We defined an objective function, imposed constraints, and executed the PSO algorithm. Finally, we visualized the optimization progress using a convergence plot.

PSO is a powerful technique for solving complex optimization problems, and Python provides excellent libraries to implement it effectively.

Complete PSO Code in Python

    import numpy as np
    import matplotlib.pyplot as plt
    from pyswarm import pso

    # ===============================
    # Objective Function
    # ===============================
    # Defines the function to be minimized
    # The function represents a linear combination of variables
    def objective_function(x):
        return 8 * x[0] + 1 * x[1] + 3 * x[2]  # Minimize this function

    # ===============================
    # Define the Inequality Constraints
    # ===============================
    # Constraints ensure that the solution space is restricted
    # Each entry is non-negative when the corresponding constraint is satisfied
    def inequality_constraints(x):
        return np.array([
            14 - (-1 * x[0] - 2 * x[1] + 1 * x[2]),
            -33 - (-4 * x[0] - 1 * x[1] - 3 * x[2]),
            20 - (2 * x[0] + 1 * x[1] + 1 * x[2])
        ])

    # ===============================
    # Define the Equality Constraint
    # ===============================
    # Returns zero when x1 + x3 = 6.5 holds
    # pso has no equality-constraint argument, so this is used only to check the result
    def equality_constraint(x):
        return 6.5 - (x[0] + x[2])  # x1 + x3 = 6.5

    # ===============================
    # Define Bounds
    # ===============================
    # Lower and upper bounds restrict the search space
    lb = [0, 0, 0]    # Lower bounds
    ub = [10, 10, 1]  # Upper bounds

    # ===============================
    # Run Particle Swarm Optimization
    # ===============================
    # Uses the PSO algorithm to find the optimal solution
    best_x, best_fval = pso(objective_function, lb, ub, f_ieqcons=inequality_constraints, swarmsize=30, maxiter=40)

    # ===============================
    # Display Results
    # ===============================
    # Shows the best solution and corresponding objective function value
    print("Optimal Solution:", best_x)
    print("Optimal Objective Function Value:", best_fval)
    print("Equality Constraint Residual:", equality_constraint(best_x))

    # ===============================
    # Plot the Convergence Curve
    # ===============================
    # Reruns the optimizer with increasing iteration limits and plots each result
    def plot_convergence():
        iterations = np.arange(1, 11)  # Iteration limits from 1 to 10
        function_values = []
        for i in iterations:
            # Each call is an independent run limited to i iterations
            _, fval = pso(objective_function, lb, ub, f_ieqcons=inequality_constraints, swarmsize=20, maxiter=i)
            function_values.append(fval)
        plt.plot(iterations, function_values, marker='o', linestyle='-', color='b')
        plt.xlabel('Iterations')
        plt.ylabel('Objective Function Value')
        plt.title('PSO Convergence Plot')
        plt.grid(True)
        plt.show()

    # Call the plot function to visualize results
    plot_convergence()

Advantages of Using Particle Swarm Optimization

Particle swarm optimization (PSO) is a well-regarded optimization technique that has gained popularity due to its numerous advantages over other algorithms. One of the primary benefits of the particle swarm optimization algorithm is its inherent simplicity. The foundational concept of PSO is based on the social behavior of organisms, which makes it intuitive and easier to implement. This is particularly advantageous for practitioners working with various optimization problems since it requires minimal tuning compared to more complex algorithms.

Another notable advantage of PSO is its speed of convergence on many problems. Particles share the best positions they have found, which lets the swarm move quickly toward promising regions of the search space. This is especially useful in dynamic environments where solutions must adapt promptly to changing conditions, and it is one reason PSO is applied in fields such as engineering, finance, and machine learning.

Moreover, PSO is effective on a wide range of optimization problems. It is formulated for continuous search spaces, and binary and discrete variants extend it to combinatorial tasks. This versatility makes it a common choice for complex, non-linear problems, and researchers have applied it successfully in both MATLAB and Python.

Python PSO libraries such as pyswarm make the technique accessible to data scientists and engineers who want to incorporate it into their workflows. Overall, the combination of simplicity, speed, and effectiveness explains the growing popularity of particle swarm optimization among optimization algorithms.

Applications of Particle Swarm Optimization

Particle Swarm Optimization (PSO) has garnered significant attention in various fields due to its versatility and effectiveness in solving complex optimization problems. One notable area of application is engineering, where PSO is employed for tasks such as optimizing design parameters, enhancing control systems, and refining product quality. For instance, engineers have successfully utilized the particle swarm optimization algorithm to streamline the design of mechanical components, ensuring that performance criteria are met while minimizing costs.

In robotics, the particle swarm algorithm plays a critical role in trajectory planning and multi-robot coordination. By leveraging the PSO framework, robotic systems can efficiently navigate and complete tasks in dynamic environments. One remarkable example includes the use of particle swarm optimization in swarm robotics, where multiple robots collaborate to achieve a common goal, improving task efficiency and adaptability.

The finance sector also benefits from PSO, particularly in portfolio optimization and risk assessment. Investors and analysts use PSO to search for asset allocations that balance expected return against risk in uncertain markets. Because the search can be rerun as market conditions change, allocations can be adjusted in response to volatility.

Furthermore, artificial intelligence applications are increasingly leveraging PSO for various optimization tasks, including feature selection and neural network training. The integration of particle swarm optimization in machine learning has demonstrated significant improvements in model accuracy and convergence speed. For instance, researchers have effectively combined PSO with neural networks to optimize weights and biases, resulting in enhanced predictive capabilities.

Overall, the diverse applications of particle swarm optimization across engineering, robotics, finance, and artificial intelligence exemplify its versatility as an optimization tool. The continuous exploration of innovative applications in these fields signifies the ongoing relevance and effectiveness of the PSO algorithm in solving real-world challenges.

Comparative Analysis: PSO vs Other Optimization Techniques

Particle swarm optimization (PSO) is a population-based optimization technique inspired by social behavior observed in animals. When comparing PSO to other prominent optimization algorithms, such as genetic algorithms (GA) and simulated annealing (SA), it is essential to understand their respective advantages and limitations.

Genetic algorithms utilize principles of natural selection and genetics to evolve solutions towards an optimal state. One of the strengths of GA lies in its ability to explore a vast search space effectively. However, GAs often require more computational resources and time due to the complexity of genetic operations—crossover and mutation—applied to a population. In contrast, the particle swarm optimization algorithm focuses on simple mathematical operations and communication among particles, leading to faster convergence in many situations, especially in continuous optimization problems.

On the other hand, simulated annealing is inspired by the annealing process in metallurgy, in which a material is cooled slowly so that it settles into a stable state. The strength of SA lies in its capacity to escape local optima, because it accepts worse solutions with a probability that is high at high temperatures and shrinks as the temperature is lowered; however, it can be sensitive to the cooling schedule used. PSO instead relies on a population: many particles explore the solution space in parallel and share their best positions, which can reduce, though not eliminate, the likelihood of being trapped in local optima.
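The probabilistic acceptance that lets simulated annealing escape local optima is usually expressed with the Metropolis rule, exp(-delta / T). The short sketch below evaluates it for the same cost increase at three temperatures; the function name and the sample values are illustrative assumptions.

```python
import math


def acceptance_probability(delta, temperature):
    """Metropolis rule: probability of accepting a move that changes the cost by delta."""
    if delta <= 0:
        return 1.0  # improvements are always accepted
    return math.exp(-delta / temperature)


# The same worsening move (delta = 1) becomes less acceptable as the system cools.
for temperature in (10.0, 1.0, 0.1):
    print(temperature, acceptance_probability(1.0, temperature))
```

PSO has no direct counterpart to this rule; its ability to leave a poor region comes from particle momentum and from information shared across the swarm.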

When it comes to practical implementation, PSO and SA are both typically simpler to code than a genetic algorithm, and the PSO libraries available for Python lower the barrier further. The flexibility of PSO across applications, combined with its ease of implementation, makes it a favored choice among practitioners in fields ranging from engineering to machine learning.

Ultimately, selecting the appropriate optimization technique depends on the specific problem characteristics, including dimensionality, computational resources, and desired convergence speed. Understanding the strengths and weaknesses of the particle swarm algorithm compared to GA and SA will enable practitioners to make informed decisions tailored to their optimization needs.


Challenges and Limitations of PSO

While Particle Swarm Optimization (PSO) has gained significant attention for its effectiveness across various optimization problems, it is essential to acknowledge its inherent challenges and limitations. One of the most prominent issues faced by the particle swarm algorithm is premature convergence. This phenomenon occurs when the swarm converges to a suboptimal solution before exploring the entire solution space. As a result, the potential of finding a global optimum may be significantly reduced, leading to subpar results.

Another challenge associated with the PSO algorithm involves the issue of local minima. In complex problem domains with multiple peaks or valleys, the algorithm may settle into a local minimum rather than progressing towards the global optimum. This risk of becoming trapped in local minima can deter the algorithm’s efficiency, especially in high-dimensional or nonlinear optimization problems.

Parameter tuning presents yet another layer of complexity in PSO implementations, whether in MATLAB or Python. Key parameters, such as the number of particles, the inertia weight, and the cognitive and social coefficients, play a critical role in determining the algorithm’s performance. However, identifying good values for these parameters can be a daunting task, often requiring prior knowledge of the specific problem domain and extensive experimentation. Consequently, users may find it difficult to achieve robust and consistent results across different applications.
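One widely cited starting point for these coefficients is the constriction factor proposed by Clerc and Kennedy, which derives an inertia-like multiplier from the sum of the cognitive and social coefficients. The sketch below computes it for the commonly quoted choice c1 = c2 = 2.05; treat the result as a reasonable default to begin experimenting from rather than a guarantee of good performance. The function name is our own.

```python
import math


def constriction_parameters(c1=2.05, c2=2.05):
    """Return (chi, chi * c1, chi * c2) using the Clerc-Kennedy constriction factor."""
    phi = c1 + c2
    if phi <= 4:
        raise ValueError("c1 + c2 must be greater than 4 for this formula")
    chi = 2.0 / abs(2.0 - phi - math.sqrt(phi * phi - 4.0 * phi))
    # chi plays the role of the inertia weight; the products are the effective coefficients.
    return chi, chi * c1, chi * c2


chi, cognitive, social = constriction_parameters()
print(round(chi, 4), round(cognitive, 4), round(social, 4))
```

For c1 = c2 = 2.05 this gives an inertia weight of roughly 0.73 and effective coefficients of roughly 1.5, values that appear as defaults in many PSO implementations.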

Finally, while the PSO approach can be relatively simple to implement, its reliance on stochastic processes may result in unpredictable behavior. This inherent randomness can lead to variability in results across multiple runs, further complicating the optimization process. Through a balanced view of these challenges and limitations, it becomes clear that while the particle swarm optimization methodology is a powerful technique, it requires careful consideration and strategies to mitigate potential drawbacks.


Recent Advances in Particle Swarm Optimization

Particle Swarm Optimization (PSO) has evolved significantly since its inception, with numerous advancements enhancing its functionality and application across various domains. One noteworthy area of development is the introduction of hybrid PSO algorithms that combine the strengths of PSO with other optimization techniques.

These hybrid models aim to overcome the limitations of the traditional particle swarm optimization algorithm, particularly in complex problem spaces. For instance, integrating PSO with genetic algorithms or simulated annealing has shown promising results in improving convergence rates and solution quality.

Another significant advancement is the adaptation of the particle swarm algorithm to tackle multi-objective optimization problems. Researchers have developed Multi-Objective PSO (MOPSO), which facilitates the simultaneous optimization of multiple conflicting objectives. This variant has been particularly beneficial in fields such as engineering design, where multiple criteria must be satisfied. Recent implementations of MOPSO utilize advanced computational frameworks like MATLAB and Python, further streamlining the optimization process and allowing for broader accessibility among researchers and practitioners.
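Multi-objective variants rest on the idea of Pareto dominance: one solution dominates another if it is no worse in every objective and strictly better in at least one. The sketch below shows that comparison and a simple filter for the non-dominated set, assuming all objectives are minimized; it illustrates the concept only and is not a MOPSO implementation. The function names and sample points are our own.

```python
def dominates(a, b):
    """True if a is no worse than b in every objective and better in at least one."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))


def non_dominated(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Each tuple holds two objective values for one candidate solution.
candidates = [(1, 5), (2, 2), (3, 3), (5, 1)]
print(non_dominated(candidates))
```

Here (3, 3) is dropped because (2, 2) is better in both objectives, while the other three points each represent a different trade-off. A multi-objective PSO typically keeps such a set as an archive from which leaders are chosen.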

Furthermore, recent studies have focused on improving the robustness of the PSO algorithm under dynamic conditions. Adaptive PSO approaches have been devised, enabling the method to respond better to changes in the optimization landscape, thereby providing more reliable and efficient solutions over time. In practical applications, the integration of particle swarm optimization in Python and its implementation through libraries have made it easier for developers to experiment and leverage the method in real-world scenarios, such as machine learning and artificial intelligence.

In conclusion, as research continues to expand, particle swarm optimization remains a vital area of exploration. The latest advancements demonstrate the algorithm’s versatility and adaptability in solving increasingly complex optimization problems, solidifying its relevance in various scientific and industrial fields.

Future Trends in Particle Swarm Optimization

As technology continues to evolve, the field of optimization is set to undergo significant changes, particularly in the realm of particle swarm optimization (PSO). This optimization technique, inspired by social behavior patterns in natural systems, has proven to be effective in various applications, such as engineering, computer science, and data analysis. Looking ahead, several promising trends are emerging within the landscape of particle swarm optimization.

Firstly, there is an increasing interest in the integration of particle swarm optimization algorithms with other artificial intelligence (AI) techniques. For instance, combining PSO with neural networks can enhance machine learning performance by improving the training process of the models.

This synergy can lead to more robust solutions that capitalize on the strengths of both methodologies. As such, we can see PSO’s adaptability being applied in complex AI systems, further solidifying its relevance in the rapidly advancing digital world.

Secondly, emerging technologies such as the Internet of Things (IoT) and big data present unique challenges that can be addressed using advanced particle swarm optimization techniques. The need for real-time data analysis and decision-making in IoT systems can be optimized through efficient PSO algorithms.

Furthermore, with the increasing complexity of data sets, the demand for sophisticated optimization methods, including the particle swarm optimization in Python and MATLAB implementations, is expected to rise. These implementations will make PSO more accessible and applicable across multiple domains.

Moreover, there is potential for advancements in the theoretical foundations of particle swarm optimization. Researchers are likely to delve deeper into hybrid models and variant algorithms, further exploring the nuances of PSO. These efforts may yield improved convergence rates and better global search capabilities, ultimately enhancing the performance of particle swarm optimization. As we move forward, it is crucial for researchers and practitioners alike to stay abreast of these trends, as they will undoubtedly shape the future of optimization methodologies.